Overview:

In this tutorial, I show how to implement gRPC load balancing with Nginx so that requests are distributed across all the server instances.

If you are new to gRPC, please take a look at these articles first.

- Protocol Buffers – A Simple Introduction

- gRPC – An Introduction Guide

- gRPC Unary API In Java – Easy Steps

- gRPC Server Streaming API In Java

gRPC Load Balancing:

gRPC is a great choice for client-server application development and a good alternative to traditional REST-based communication between microservices. gRPC is also faster than REST (check out gRPC vs REST performance comparison). It improves performance by using a persistent HTTP/2 connection. Because the client/server connection is persistent, all requests from a client go to the same server over that connection. This becomes a problem when we run multiple instances of a gRPC service to handle many clients and provide high availability: unless something distributes the requests, one instance can be overloaded while the others sit idle. We need the ability to balance the load among all these server instances, so that no single server is overloaded.
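To make the problem concrete, here is a minimal sketch in plain Java with no gRPC involved. It only counts where requests would land in the two situations described above: a client pinned to one server by its persistent connection, and a proxy that picks the next server for each request (round robin, which is nginx's default method for an upstream group). The class and method names (`StickyVsBalanced`, `sticky`, `roundRobin`) are made up for this illustration.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class StickyVsBalanced {

    // One persistent connection: every request lands on the server
    // the connection was opened to (here, simply the first one).
    static Map<String, Integer> sticky(List<String> servers, int requests) {
        Map<String, Integer> counts = emptyCounts(servers);
        String pinned = servers.get(0);
        for (int i = 0; i < requests; i++) {
            counts.merge(pinned, 1, Integer::sum);
        }
        return counts;
    }

    // A proxy in front of the servers picks the next server for each request.
    static Map<String, Integer> roundRobin(List<String> servers, int requests) {
        Map<String, Integer> counts = emptyCounts(servers);
        for (int i = 0; i < requests; i++) {
            counts.merge(servers.get(i % servers.size()), 1, Integer::sum);
        }
        return counts;
    }

    // Start every server at zero so idle servers still show up in the result.
    private static Map<String, Integer> emptyCounts(List<String> servers) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        servers.forEach(s -> counts.put(s, 0));
        return counts;
    }

    public static void main(String[] args) {
        List<String> servers = List.of("server1", "server2");
        System.out.println("sticky:      " + sticky(servers, 10));     // {server1=10, server2=0}
        System.out.println("round robin: " + roundRobin(servers, 10)); // {server1=5, server2=5}
    }
}
```

With ten requests, the pinned client sends all ten to `server1`, while the round-robin proxy sends five to each server. That second distribution is what we want from the load balancer.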

Let's see how to implement this using Nginx.

gRPC – Service Definition:

We need a service for this load balancing demo.

- I create a service definition as shown here.

    syntax = "proto3";

    package calculator;

    option java_package = "com.vinsguru.calculator";
    option java_multiple_files = true;

    message Input {
        int32 number = 1;
    }

    message Output {
        int64 result = 1;
    }

    service CalculatorService {
        // unary
        rpc findFactorial(Input) returns (Output) {};
    }

gRPC – Server Side:

I implement the service as shown here.

    import com.vinsguru.calculator.CalculatorServiceGrpc;
    import com.vinsguru.calculator.Input;
    import com.vinsguru.calculator.Output;
    import io.grpc.stub.StreamObserver;

    public class UnaryCalculatorService extends CalculatorServiceGrpc.CalculatorServiceImplBase {

        @Override
        public void findFactorial(Input request, StreamObserver<Output> responseObserver) {
            var input = request.getNumber();

            // print host name
            HostnamePrinter.print();

            // positive int
            long result = this.factorial(input);
            Output output = Output.newBuilder()
                    .setResult(result)
                    .build();
            responseObserver.onNext(output);
            responseObserver.onCompleted();
        }

        // Assumes number >= 0: a negative input would recurse until the stack overflows,
        // and the result overflows long for inputs above 20.
        private long factorial(int number){
            if(number == 0)
                return 1;
            return number * factorial(number - 1);
        }

    }

To make the demo easier to follow, I have a host name printer class that prints the host name of the service, so that we can see which instance processes each request when we send multiple requests to the server.

    import java.net.InetAddress;
    import java.net.UnknownHostException;

    public class HostnamePrinter {

        public static void print(){
            try {
                System.out.println("Processed by host :: " + InetAddress.getLocalHost().getHostName());
            } catch (UnknownHostException e) {
                e.printStackTrace();
            }
        }

    }

I create a simple Docker image for the above gRPC service using the Dockerfile below, so that we can run multiple containers later.

    FROM openjdk:11.0.7-jre-slim
    WORKDIR /usr/app
    ADD target/*-dependencies.jar app.jar
    EXPOSE 6565
    ENTRYPOINT java -jar app.jar

Nginx Configuration:

We will run a couple of instances of the Docker container for the above service. Let's call them grpc-server1 and grpc-server2. I create an nginx conf file named default.conf as shown here. Nginx listens on port 6565 and proxies the incoming requests to the two gRPC servers.

Do note that the http2 parameter on the listen directive is essential for balancing gRPC requests, because gRPC runs over HTTP/2. Without it, this will not work.

    upstream grpcservers {
        server grpc-server1:6565;
        server grpc-server2:6565;
    }

    server {
        listen 6565 http2;

        location /calculator.CalculatorService/findFactorial {
            grpc_pass grpc://grpcservers;
        }
    }

Docker-Compose Set Up:

I create a docker-compose file to run the two gRPC server instances with specific names, along with the nginx server, as shown here.

version: "3"

services:

grpc-server1:

build: .

image: vinsdocker/grpc-server

hostname: server1

grpc-server2:

image: vinsdocker/grpc-server

hostname: server2

depends_on:

- grpc-server1

nginx:

image: nginx:1.15-alpine

depends_on:

- grpc-server1

- grpc-server2

volumes:

- ./nginx-conf/conf:/etc/nginx/conf.d

ports:

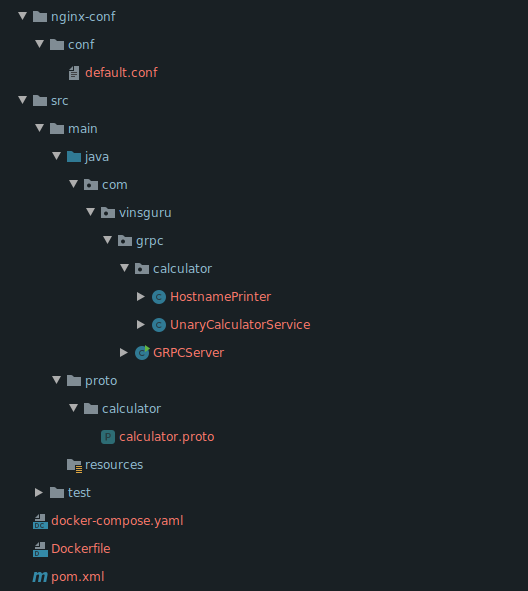

- 6565:6565- My project structure is like this.

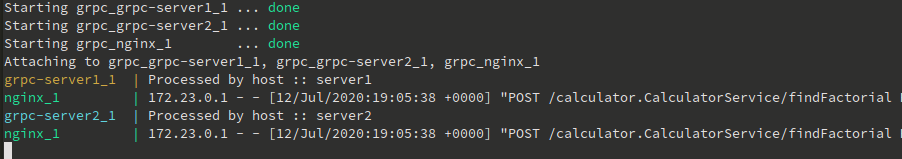

- Bring the infrastructure up by issuing the command below.

    docker-compose up

gRPC Load Balancing Demo:

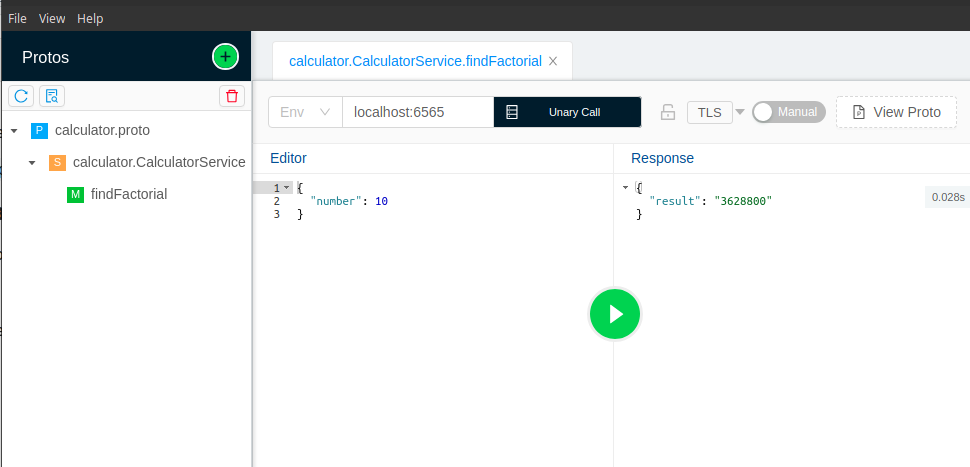

At this point, nginx is ready to balance the load. Let’s test it using a simple client or BloomRPC by sending the same request multiple times.

We can see that our requests are balanced by nginx and both servers are processing our requests.
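If you prefer numbers to eyeballing the console, the "Processed by host :: " lines printed by HostnamePrinter can be tallied per host. The following is a minimal sketch in plain Java. The `HostTally` class and its `tally` method are hypothetical helpers, and the sample lines in `main` are made up for illustration, not captured output. The sketch assumes only that each relevant log line contains the marker text followed by the host name, whatever prefix docker-compose puts in front of it.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class HostTally {

    // Marker text printed by HostnamePrinter in the article.
    static final String MARKER = "Processed by host :: ";

    // Counts how many log lines each host produced; lines without the marker are ignored.
    static Map<String, Integer> tally(List<String> logLines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : logLines) {
            int at = line.indexOf(MARKER);
            if (at >= 0) {
                String host = line.substring(at + MARKER.length()).trim();
                counts.merge(host, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Illustrative sample lines only.
        List<String> sample = List.of(
                "Processed by host :: server1",
                "Processed by host :: server2",
                "some unrelated startup line",
                "Processed by host :: server1",
                "Processed by host :: server2");
        System.out.println(tally(sample)); // {server1=2, server2=2}
    }
}
```

Roughly equal counts for server1 and server2 would indicate that nginx is spreading the requests across both instances.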


Summary:

We were able to successfully demonstrate gRPC load balancing using Nginx. The client does not need any knowledge of the back-end services. It sends its requests to a single endpoint, and nginx takes care of balancing the load among the servers behind it.


The source code is available here.

Happy learning 🙂

I always get the following error when I trigger a call from BloomRPC:

    { "error": "12 UNIMPLEMENTED: Method calculator.CalculatorService/findFactorial is unimplemented" }

I’m executing the fat jar locally (no Docker here).

Can you check which service implementation your server registers? You are not limited to a single implementation, but in my example I have multiple implementations of the same service interface, and that could be why you get this error. This is how the service is registered:

    Server server = ServerBuilder.forPort(6565)
            .addService(new UnaryCalculatorService())
            .build();
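For anyone hitting the same error, here is a rough sketch of why the choice of implementation matters. The base class that grpc-java generates answers an RPC with UNIMPLEMENTED unless the method is overridden. The plain-Java classes below only imitate that behaviour with strings. `CalculatorBase`, `UnaryCalculator` and `OtherCalculator` are hypothetical stand-ins, not the generated code or the classes from my project.

```java
public class UnimplementedSketch {

    // Stand-in for a generated *ImplBase class: the default answer is UNIMPLEMENTED.
    static class CalculatorBase {
        String findFactorial(int number) {
            return "12 UNIMPLEMENTED: Method calculator.CalculatorService/findFactorial is unimplemented";
        }
    }

    // Overrides the method, like UnaryCalculatorService in the article.
    static class UnaryCalculator extends CalculatorBase {
        @Override
        String findFactorial(int number) {
            long result = 1;
            for (int i = 2; i <= number; i++) {
                result *= i;
            }
            return String.valueOf(result);
        }
    }

    // Hypothetical second implementation that never overrides findFactorial.
    static class OtherCalculator extends CalculatorBase { }

    public static void main(String[] args) {
        System.out.println(new UnaryCalculator().findFactorial(5)); // 120
        System.out.println(new OtherCalculator().findFactorial(5)); // the UNIMPLEMENTED message
    }
}
```

So if the server is started with an implementation that does not override findFactorial, the call fails even though the service itself is registered.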