Overview:

In this tutorial, I would like to compare the performance of NATS messaging against REST for communication between microservices.

NATS is a high-performance, cloud-native messaging server, which we have already discussed here. In modern distributed systems, NATS can help with service discovery, load balancing, inter-microservice communication, etc.

Sample Application:

Our main goal here is to build an application with 2 different implementations (REST and NATS) of exactly the same functionality. We deliberately design it so that the microservices are very chatty. Since NATS is well suited to inter-microservice communication, let's see how it performs compared to REST.

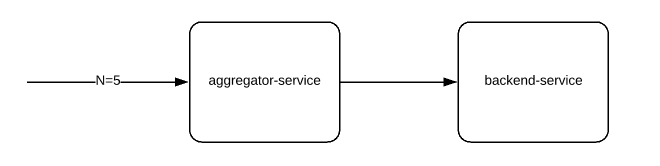

To keep things simple, let's consider 2 services: an aggregator service and a back-end server. The back-end server is basically a square calculator for a given number: if you send it 2, it responds with 4.

The aggregator service, however, receives a request for N and wants the squares of all the numbers from 1 to N. It does not know how to calculate them and relies on the back-end server. The only way for the aggregator to get the results for all the numbers up to N is to send N requests to the server, that is, one request each for 1, 2, 3, … N.
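To make the fan-out concrete, here is a minimal plain-JDK sketch of what the aggregator does for a single request. It assumes the back-end call can be modeled as an `IntFunction<CompletableFuture<Integer>>`; that parameter and the `SquareAggregator` class are hypothetical stand-ins, while the actual services later in this tutorial use Spring WebFlux and the NATS client.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.IntFunction;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical sketch: one back-end call per number 1..n, collected as {number: square} entries.
final class SquareAggregator {

    // Stand-in for the network call to the back-end (REST or NATS in the real project).
    private final IntFunction<CompletableFuture<Integer>> backend;

    SquareAggregator(IntFunction<CompletableFuture<Integer>> backend) {
        this.backend = backend;
    }

    List<Map<Integer, Integer>> aggregate(int n) {
        // Fire all n requests first so they can be in flight at the same time...
        List<CompletableFuture<Map<Integer, Integer>>> calls = IntStream.rangeClosed(1, n)
                .mapToObj(i -> backend.apply(i).thenApply(square -> Map.of(i, square)))
                .collect(Collectors.toList());
        // ...then wait for every response, keeping them in request order.
        return calls.stream()
                .map(CompletableFuture::join)
                .collect(Collectors.toList());
    }
}
```

For example, `new SquareAggregator(i -> CompletableFuture.supplyAsync(() -> i * i)).aggregate(5)` returns `[{1=1}, {2=4}, {3=9}, {4=16}, {5=25}]`. This sketch keeps results in request order; Reactor's `flatMap`, which the real aggregator uses, emits results as they arrive, so its output is not guaranteed to be sorted.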


When N is 5, the aggregator sends 5 requests to the server, aggregates all the server responses and responds to its client as shown below.

[
  { "1": 1 },
  { "2": 4 },
  { "3": 9 },
  { "4": 16 },
  { "5": 25 }
]

When N is 1000, a single aggregator request makes the aggregator send 1000 requests to its back-end. We intentionally do it this way to create more chattiness!
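The response body above is just a JSON array of single-entry objects. Here is a minimal plain-JDK sketch of that rendering, useful to see how the payload grows with N. The `SquareJson.toJson` helper is hypothetical; in the real services Spring serializes the `Flux` for you.

```java
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical helper rendering the aggregator's response for a given N
// in the same compact shape the services return: [{"1":1},{"2":4},...].
final class SquareJson {

    static String toJson(int n) {
        return IntStream.rangeClosed(1, n)
                // long arithmetic so large n does not overflow the square
                .mapToObj(i -> "{\"" + i + "\":" + (long) i * i + "}")
                .collect(Collectors.joining(",", "[", "]"));
    }
}
```

`SquareJson.toJson(1000).length()` is 14437, so every aggregator response for N = 1000 is roughly 14 KB of JSON, close to the 14450-byte Document Length that ApacheBench reports in the performance test.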

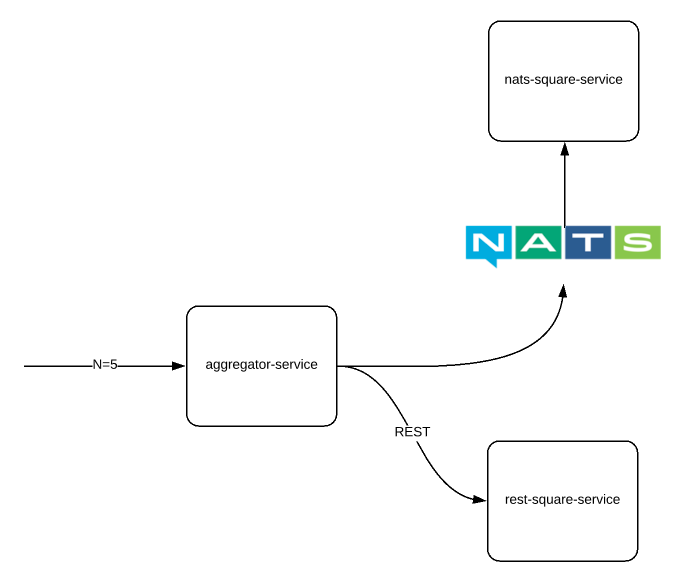

We are going to have 2 different implementations of the server-side logic, as shown here. Depending on the path prefix of the request (/rest or /nats), the aggregator calls either the REST service or the NATS service and gives us the results.
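Both implementations provide exactly the same operation, so conceptually the aggregator needs only one abstraction with two transports behind it. Here is a hypothetical sketch of that idea: the `SquareBackend` and `BackendRouter` names are made up for illustration, and the project itself simply uses two controllers mapped to /rest and /nats.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;

// Hypothetical abstraction: each transport answers "what is number squared?"
interface SquareBackend {
    CompletableFuture<Integer> square(int number);
}

// Hypothetical router: picks a backend from the first path segment, e.g. "rest" or "nats".
final class BackendRouter {

    private final Map<String, SquareBackend> backends;

    BackendRouter(Map<String, SquareBackend> backends) {
        this.backends = Map.copyOf(backends);
    }

    SquareBackend forPath(String path) {
        // "/nats/square/unary/10" -> "nats"
        String trimmed = path.startsWith("/") ? path.substring(1) : path;
        String prefix = trimmed.split("/", 2)[0];
        SquareBackend backend = backends.get(prefix);
        if (backend == null) {
            throw new IllegalArgumentException("No backend for prefix: " + prefix);
        }
        return backend;
    }
}
```

Keeping the transport behind one interface is what makes the comparison fair: the aggregation logic stays identical and only the wire protocol changes.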

Project Setup:

- First, I create a multi-module Maven project.

- I use a proto file to define the models. To learn more about protobuf, check here.

syntax = "proto3";

package vinsmath;

option java_package = "com.vinsguru.model";
option java_multiple_files = true;

message Input {
  int32 number = 1;
}

message Output {
  int32 number = 1;
  int32 result = 2;
}

REST – Server Setup:

- This will be a Spring Boot project.

- Controller

@RestController
public class RestSquareController {

    @GetMapping("/api/square/unary/{number}")
    public int getSquareUnary(@PathVariable int number){
        return number * number;
    }

}

- This application will be listening on port 7575

server.port=7575

NATS Messaging – Server Setup:
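The NATS service below relies on NATS request-reply: the requester publishes each message on the nats.square.service subject together with a unique reply-to subject (an "inbox"), and the service publishes its answer to `msg.getReplyTo()`. To show the idea without a running NATS server, here is a toy in-memory model of that flow built only on the JDK. It is a hypothetical illustration, not the jnats API: `ToyBus`, `ToySquareResponder` and their methods are made up, the payload is a 4-byte int instead of protobuf, and handlers run synchronously on the caller's thread, unlike a real NATS dispatcher.

```java
import java.nio.ByteBuffer;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.BiConsumer;

// Toy in-memory model of NATS-style request-reply. Hypothetical; NOT the jnats API.
final class ToyBus {

    // subject -> handler receiving (payload, replyTo subject or null)
    private final Map<String, BiConsumer<byte[], String>> subscribers = new ConcurrentHashMap<>();
    // inbox subject -> future completed when a reply is published to that inbox
    private final Map<String, CompletableFuture<byte[]>> pendingReplies = new ConcurrentHashMap<>();

    void subscribe(String subject, BiConsumer<byte[], String> handler) {
        subscribers.put(subject, handler);
    }

    // Fire-and-forget publish; publishing to a pending inbox completes that request.
    void publish(String subject, byte[] payload) {
        CompletableFuture<byte[]> waiting = pendingReplies.remove(subject);
        if (waiting != null) {
            waiting.complete(payload);
            return;
        }
        BiConsumer<byte[], String> handler = subscribers.get(subject);
        if (handler != null) {
            handler.accept(payload, null);
        }
    }

    // Request-reply: create a unique inbox, deliver the message with it as replyTo,
    // and return a future that completes when someone publishes to the inbox.
    CompletableFuture<byte[]> request(String subject, byte[] payload) {
        String inbox = "_INBOX." + UUID.randomUUID(); // mimics NATS's default inbox prefix
        CompletableFuture<byte[]> reply = new CompletableFuture<>();
        BiConsumer<byte[], String> handler = subscribers.get(subject);
        if (handler == null) {
            reply.completeExceptionally(new IllegalStateException("no responders for " + subject));
            return reply;
        }
        pendingReplies.put(inbox, reply);
        handler.accept(payload, inbox);
        return reply;
    }
}

// Mirrors the square service's handler, but with a 4-byte int payload instead of protobuf.
final class ToySquareResponder {

    static void register(ToyBus bus) {
        bus.subscribe("nats.square.service", (data, replyTo) -> {
            int n = ByteBuffer.wrap(data).getInt();
            bus.publish(replyTo, ByteBuffer.allocate(Integer.BYTES).putInt(n * n).array());
        });
    }
}
```

The key point is that the requester never needs to know where the responder lives: it only knows the subject, and the reply finds its way back through the inbox.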

- Service

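import com.google.protobuf.InvalidProtocolBufferException;
import com.vinsguru.model.Input;
import com.vinsguru.model.Output;
import io.nats.client.Connection;
import io.nats.client.Dispatcher;
import io.nats.client.Nats;

import java.io.IOException;
import java.util.Objects;

public class NatsSquareService {

    public static void main(String[] args) throws IOException, InterruptedException {
        // NATS_SERVER overrides the default local server URL
        String natsServer = Objects.toString(System.getenv("NATS_SERVER"), "nats://localhost:4222");
        Connection nats = Nats.connect(natsServer);
        // the dispatcher's default handler is a no-op; the subscription supplies its own handler
        Dispatcher dispatcher = nats.createDispatcher(msg -> {});
        dispatcher.subscribe("nats.square.service", (msg) -> {
            try {
                Input input = Input.parseFrom(msg.getData());
                Output output = Output.newBuilder().setNumber(input.getNumber()).setResult(input.getNumber() * input.getNumber()).build();
                // reply to the requester's inbox
                nats.publish(msg.getReplyTo(), output.toByteArray());
            } catch (InvalidProtocolBufferException e) {
                e.printStackTrace();
            }
        });
    }

}

- The above service subscribes to the NATS subject below. Whenever a message is received, it calculates the square and responds.

nats.square.service

Aggregator Service: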

- This will be the main entry point for us to access both services.

- This service acts as the client for both the NATS and REST based services we created above.

- REST client

@Service
public class RestSquareService {

    @Value("${rest.square.service.endpoint}")
    private String baseUrl;

    private WebClient webClient;

    @PostConstruct
    private void init(){
        this.webClient = WebClient.builder().baseUrl(baseUrl).build();
    }

    public Flux<Object> getUnaryResponse(int number){
        return Flux.range(1, number)
                .flatMap(i -> this.webClient.get()
                        .uri(String.format("/api/square/unary/%d", i))
                        .retrieve()
                        .bodyToMono(Object.class)
                        .map(o -> (Object) Map.of(i, o)))
                .subscribeOn(Schedulers.boundedElastic());
    }

}

- Aggregator Controller for Rest

@RestController
@RequestMapping("rest")
public class RestServiceController {

    @Autowired
    private RestSquareService service;

    @GetMapping("/square/unary/{number}")
    public Flux<Object> getResponseUnary(@PathVariable int number){
        return this.service.getUnaryResponse(number);
    }

}

- NATS client – This service is responsible for sending a request for every number up to N and aggregating the responses.

@Service
public class NatsSquareService {

    @Autowired
    private Connection nats;

    public Flux<Object> getSquareResponseUnary(int number){
        return Flux.range(1, number)
                .map(i -> Input.newBuilder().setNumber(i).build())
                .flatMap(i -> Mono.fromFuture(this.nats.request("nats.square.service", i.toByteArray()))
                        .map(this::toOutput)
                        .map(o -> (Object) Map.of(o.getNumber(), o.getResult())))
                .subscribeOn(Schedulers.boundedElastic());
    }

    private Output toOutput(final Message message) {
        try {
            return Output.parseFrom(message.getData());
        } catch (InvalidProtocolBufferException e) {
            // fail this element instead of returning null, which Reactor's map() rejects
            throw new IllegalStateException("Could not parse the NATS reply", e);
        }
    }

}

- NATS connection bean

@Bean
public Connection nats(@Value("${nats.server}") String natsServer) throws IOException, InterruptedException {
    return Nats.connect(natsServer);
}

- Aggregator controller for NATS

@RestController
@RequestMapping("nats")
public class NatsServiceController {

    @Autowired
    private NatsSquareService service;

    @GetMapping("/square/unary/{number}")
    public Flux<Object> getResponseUnary(@PathVariable int number){
        return this.service.getSquareResponseUnary(number);
    }

}

- Properties

server.port=8080
rest.square.service.endpoint=http://localhost:7575
nats.server=nats://localhost:4222

- If I send the request below with N = 10, the aggregator internally sends 10 requests to the NATS/REST service and aggregates the responses as shown below.

# request
http://localhost:8080/nats/square/unary/10

# response
[{"1":1},{"2":4},{"3":9},{"4":16},{"5":25},{"6":36},{"7":49},{"8":64},{"9":81},{"10":100}]

Performance Test:

Let's run the performance test by sending 1000 requests to the aggregator service, 100 at a time, to simulate the load of 100 concurrent users. I use the ApacheBench (ab) tool for the test. I ran each test multiple times (to warm up the servers) and took the best results for the comparison.
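Since every aggregator request fans out into N back-end calls, each run with N = 1000 drives far more inter-service traffic than the 1000 requests ab counts:

$$
\underbrace{1000}_{\text{ab requests } (\texttt{-n})} \times \underbrace{1000}_{N \text{ per request}} = 10^{6} \ \text{back-end calls per run}
$$

With -c 100, up to 100 aggregator requests, and therefore up to 100 fan-outs, are in flight at any moment.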

- Rest request

ab -n 1000 -c 100 http://localhost:8080/rest/square/unary/1000

- Result

Server Software:
Server Hostname:        localhost
Server Port:            8080

Document Path:          /rest/square/unary/1000
Document Length:        14450 bytes

Concurrency Level:      100
Time taken for tests:   65.533 seconds
Complete requests:      1000
Failed requests:        0
Total transferred:      14548000 bytes
HTML transferred:       14450000 bytes
Requests per second:    15.26 [#/sec] (mean)
Time per request:       6553.313 [ms] (mean)
Time per request:       65.533 [ms] (mean, across all concurrent requests)
Transfer rate:          216.79 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.8      0       4
Processing:  5804 6492 224.7   6451    7215
Waiting:     5787 6485 225.7   6440    7214
Total:       5807 6493 224.6   6451    7216

Percentage of the requests served within a certain time (ms)
  50%   6451
  66%   6536
  75%   6628
  80%   6705
  90%   6823
  95%   6889
  98%   6960
  99%   7163
 100%   7216 (longest request)

- Nats Request

ab -n 1000 -c 100 http://localhost:8080/nats/square/unary/1000

- Result

Server Software:
Server Hostname:        localhost
Server Port:            8080

Document Path:          /nats/square/unary/1000
Document Length:        14450 bytes

Concurrency Level:      100
Time taken for tests:   4.444 seconds
Complete requests:      1000
Failed requests:        0
Total transferred:      14548000 bytes
HTML transferred:       14450000 bytes
Requests per second:    225.00 [#/sec] (mean)
Time per request:       444.446 [ms] (mean)
Time per request:       4.444 [ms] (mean, across all concurrent requests)
Transfer rate:          3196.57 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.5      0       3
Processing:   321  439  39.5    435     569
Waiting:      321  438  39.7    434     569
Total:        323  439  39.4    435     569

Percentage of the requests served within a certain time (ms)
  50%    435
  66%    446
  75%    455
  80%    463
  90%    495
  95%    527
  98%    544
  99%    555
 100%    569 (longest request)

- Results Summary

Interesting fact: just like our square calculator, NATS's throughput (225 requests/second) is roughly the square of REST's throughput (about 15 requests/second) 🙂
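ApacheBench's headline numbers are simple ratios of the raw totals it reports, so the comparison is easy to double-check. Here is a small sketch that recomputes them and derives the implied back-end call rate. The `AbMetrics` class and its method names are hypothetical; the formulas are the ones ab uses for "Requests per second" and "Time per request (mean)".

```java
// Recomputes ApacheBench-style summary metrics from the raw totals it reports.
final class AbMetrics {

    // Requests per second (mean) = completed requests / total test time.
    static double requestsPerSecond(int completed, double seconds) {
        return completed / seconds;
    }

    // Time per request (mean), in ms = concurrency * total time / completed requests.
    static double meanLatencyMillis(int concurrency, int completed, double seconds) {
        return concurrency * seconds * 1000.0 / completed;
    }

    // Each aggregator request fans out n back-end calls.
    static double backendCallsPerSecond(int completed, int n, double seconds) {
        return (double) completed * n / seconds;
    }
}
```

Plugging in the totals above (1000 requests, -c 100, 65.533 s for REST vs 4.444 s for NATS) reproduces ab's 15.26 vs 225.00 requests/second and 6553.3 ms vs 444.4 ms mean latency. That works out to NATS finishing the run about 14.7× faster, or roughly 225,000 vs 15,260 back-end calls per second through the aggregator.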

Summary:

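NATS performance is terrific! It is just WOW! On this chatty workload it handled roughly 15 times the throughput of REST. The likely reasons: the REST calls go over HTTP/1.1, which is textual and carries one request/response exchange per connection at a time, whereas NATS multiplexes all requests over a single persistent TCP connection, carries a compact binary (protobuf) payload and is non-blocking. Together, these improve the performance of inter-microservice communication a whole lot compared to REST!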
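Part of the binary-payload argument is how small the protobuf messages are on the wire. Here is a sketch that hand-encodes the `Input` and `Output` messages from the proto file using protobuf's varint rules. It is for illustration only and handles non-negative `int32` values only (protobuf encodes negative `int32` values as 10-byte varints, which this sketch does not do). The `ProtoSketch` class is hypothetical; real code should use the generated `toByteArray()`.

```java
import java.io.ByteArrayOutputStream;

// Illustrative hand-rolled protobuf encoding for the Input/Output messages.
// Handles non-negative int32 values only; real code should use the generated classes.
final class ProtoSketch {

    // Varint: 7 bits per byte, lowest group first; the high bit means "more bytes follow".
    private static void writeVarint(ByteArrayOutputStream out, int value) {
        while ((value & ~0x7F) != 0) {
            out.write((value & 0x7F) | 0x80);
            value >>>= 7;
        }
        out.write(value);
    }

    // Tag = (field number << 3) | wire type, where wire type 0 means varint.
    private static void writeIntField(ByteArrayOutputStream out, int fieldNumber, int value) {
        if (value < 0) {
            throw new IllegalArgumentException("negative int32 values are not handled in this sketch");
        }
        if (value == 0) {
            return; // proto3 does not serialize scalar fields holding the default value
        }
        writeVarint(out, fieldNumber << 3);
        writeVarint(out, value);
    }

    // message Input { int32 number = 1; }
    static byte[] encodeInput(int number) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeIntField(out, 1, number);
        return out.toByteArray();
    }

    // message Output { int32 number = 1; int32 result = 2; }
    static byte[] encodeOutput(int number, int result) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeIntField(out, 1, number);
        writeIntField(out, 2, result);
        return out.toByteArray();
    }
}
```

`encodeInput(1000)` yields the 3 bytes `08 E8 07`, and `encodeOutput(1000, 1000000)` yields 7 bytes (`08 E8 07 10 C0 84 3D`). Each REST call, by contrast, also carries an HTTP request line and headers on top of its textual body.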

I have a similar comparison of REST vs gRPC here!

Source code is available here.

Happy learning 🙂